A question came up on reddit: why is my webpage being interpreted with the incorrect character encoding? The question (which has since been removed, or else I’d link to it) involved some specifics about how the page was being served, and, paraphrased, the answer was that PHP-generated pages were served with an HTTP Content-Type header which included encoding information, and static HTML pages weren’t.

But the markup included a <meta http-equiv="Content-Type"> tag. Shouldn’t the encoding have gotten interpreted correctly regardless of the Content-Type header, then? I threw off a quick, cargo-cult-tinged remark about being sure to place a <meta> tag specifying character encoding before the <title> tag, and someone else said they thought it was interpreted anywhere in the first 128 bytes of the document.

Rather than continue to perpetrate hearsay and questionably-shiny pearls of wisdom, I’m going to try to nail down some factual observations about the behaviour of content-encoding-information-bearing <meta> tags. I want to know how the tag interacts with real HTTP headers, how it behaves when it’s placed at different offsets in the document (before rendered content? After rendered content? A long way after the start of the document?), and whether different browsers handle it differently.

tl;dr: For HTML5, avoid these issues entirely by using only UTF-8. Anything else is in violation of the current spec, so you’re throwing yourself at the mercy of browser implementations and backwards-compatibility behaviour. And place a <meta charset="utf-8"> tag somewhere near the beginning of your <head>.

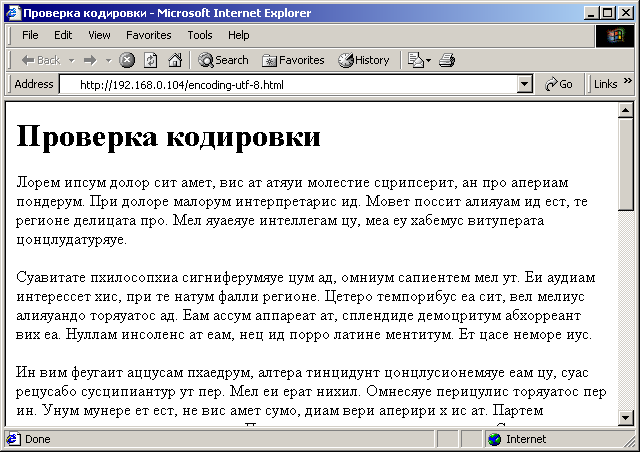

All of these tests are based on the same two test files: a 3,242 byte, Windows-1251-encoded test file:

And a 5,610 byte, UTF-8-encoded version of the same content:

The content is courtesy of a Russian Lorem ipsum generator. The original question concerned Cyrillic content encoded as Windows-1251, and that seemed to make a fine test case.
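The size gap between the two files follows from the encodings themselves: Windows-1251 stores each Cyrillic letter in one byte, while UTF-8 needs two. Here is a minimal Python sketch of that, using a made-up sample string rather than the actual test content (`cp1251` is Python's codec name for Windows-1251):

```python
# Hypothetical sample text; the real test files used generated Russian lorem ipsum.
sample = "Привет, мир"

cp1251_bytes = sample.encode("cp1251")  # Windows-1251
utf8_bytes = sample.encode("utf-8")

print(len(sample))        # 11 characters
print(len(cp1251_bytes))  # 11 bytes: one byte per character
print(len(utf8_bytes))    # 20 bytes: each of the 9 Cyrillic letters takes two
```

ASCII markup takes one byte per character in both encodings, which is why the UTF-8 file is less than twice the size of the Windows-1251 one.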

All of the variant tests were tried both as a file opened directly from disk by the browser (as a file:/// URL) and as served by a local Apache web server. The web server was set up to serve the documents with the Content-Type: text/html header, unless otherwise noted. I disabled ETags, and set the Cache-Control header to no-store. Both long-form (<meta http-equiv="Content-Type" content="text/html; charset=...">) and short-form (<meta charset="...">) <meta> tag forms were tested to confirm equivalence. (And for brevity’s sake, for the rest of the post, when I say “<meta> tag”, assume that I’m abbreviating “a <meta> tag carrying content encoding information”.)

Browsers used for testing were Firefox 80.0, Chrome 85.0.4183.83, Internet Explorer 11.1016.18362.0, and Edge 44.18362.449.0 (an EdgeHTML/pre-Chromium version, under the assumption that newer versions should behave roughly the same as Chrome).

What does the spec say?

The first, and obvious thing to understand: how is the behaviour of <meta http-equiv> tags specified? Well, in a word: barely.

MDN

If specified, the content attribute must have the value “text/html; charset=utf-8”. Note: Can only be used in documents served with a text/html MIME type — not in documents served with an XML MIME type.

https://developer.mozilla.org/en-US/docs/Web/HTML/Element/meta#attr-http-equiv

I usually find MDN to be pretty useful, but in this case, half of the two-sentence definition is irrelevant (a text/html MIME type is an accepted precondition for us). The other half is interesting: it implies that UTF-8 is the only encoding you can set via a <meta> tag. This seems to me to disagree with reality, because I’m sure I’ve used it to specify an encoding other than UTF-8 at some point – but maybe that’s a case of browsers going off-spec.

Edit, 2020-09-03 11:44 UTC: Through the magic of wikis, MDN is now a little better than when I first wrote this. Would that all documentation were as easily editable!

W3C

I realize that W3C now defers to WHATWG for the HTML5 spec, but I did want to see what they used to say. The W3C wiki page on the meta element says even less about Content-Type than did MDN, but it does drop this nugget of wisdom about the general behaviour of http-equiv meta tags:

When the http-equiv attribute is specified on a meta element, the element is a pragma directive. You can use this element to simulate an HTTP response header, but only if the server doesn’t send the corresponding real header; you can’t override an HTTP header with a meta http-equiv element.

https://www.w3.org/wiki/HTML/Elements/meta?

That would explain the original problem, then: if the server has already specifically indicated a character encoding, the page can’t override it.

The W3C Wiki (or at least the HTML5 element documentation) is clearly on the way out. There’s a redirect rule in place to push visitors to MDN; you need to append a trailing ? to the URL to confuse and suppress the redirect. (Probably intentional, but still janky.)

WHATWG

The general http-equiv overview doesn’t say anything about how to handle conflicts between HTTP headers and <meta> tags. It does say:

When a meta element is inserted into the document, if its http-equiv attribute is present and represents one of the above states, then the user agent must run the algorithm appropriate for that state, as described in the following list:

https://html.spec.whatwg.org/multipage/semantics.html#attr-meta-http-equiv

…which sounds like the browser should prioritize the tag over any HTTP header.

The content-type section reiterates that only UTF-8 should be used. It links to another section about character encoding, which further drives home the point that the only valid encoding for HTML5 is UTF-8. That’s a much saner default than ISO-8859-1 or Windows-1252, which has led to some pretty silly mistakes over the years as other platforms moved to UTF-8-by-default while the web didn’t.

Interestingly, in an unexpected outburst of minutiae, that other section also specifies that:

The element containing the character encoding declaration must be serialized completely within the first 1024 bytes of the document.

https://html.spec.whatwg.org/multipage/semantics.html#charset1024

Strange and frustrating that such an important piece of information isn’t conveyed by reference sites like MDN. If a <meta> tag defining http-equiv or charset were, say, to be alphabetically-sorted with other <head> content, it could easily be placed after some other very long tags (e.g., Open Graph tags, stylesheet <link> tags) and bumped out of that buffer.
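A check like this is easy to automate. The following Python sketch tests whether a charset-bearing <meta> tag ends within the first 1,024 bytes of a document. The function name and the regular expression are my own illustration; the regex is a rough heuristic, not the prescan algorithm from the WHATWG spec.

```python
import re

# Rough heuristic: any <meta ...> tag that mentions "charset".
# This is NOT the WHATWG prescan algorithm, only an approximation.
META_CHARSET = re.compile(rb"<meta\b[^>]*\bcharset\b[^>]*>", re.IGNORECASE)

PRESCAN_LIMIT = 1024  # byte limit quoted from the spec


def declaration_within_limit(document: bytes, limit: int = PRESCAN_LIMIT):
    """Return True if the first charset <meta> tag ends within `limit` bytes,
    False if it ends later, or None if no such tag is found."""
    match = META_CHARSET.search(document)
    if match is None:
        return None
    # match.end() is the byte offset just past the closing '>'.
    return match.end() <= limit
```

Because it works on raw bytes, the function counts bytes rather than characters, which is what the spec's limit refers to.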

Finally, that other section does make reference to a Content-Type HTTP header:

If an HTML document does not start with a BOM, and its encoding is not explicitly given by Content-Type metadata, and the document is not an iframe srcdoc document, then the encoding must be specified using a meta element with a charset attribute or a meta element with an http-equiv attribute in the Encoding declaration state.

https://html.spec.whatwg.org/multipage/semantics.html#charset

(Emphasis mine.) I don’t interpret this as meaning that the Content-Type header takes precedence over a <meta> tag if present. All this is saying is that if the header (among a few other things) isn’t present, then the <meta> tag must be. It doesn’t specify precedence if both are present.

Incidentally, when I peeked at the source of that page to get an ID for the bit about 1,024 bytes so that I could deep link to it, I found that it has an alternative ID: #charset512. Looks like the requirement used to be that the <meta> tag must fall within the first 512 bytes of the document. This was discussed in late 2010, and changed on 2011-02-09 to reflect what browsers were already doing.

Browsers still default to Windows-1252, not UTF-8

With my web server set to serve a Content-Type header of only text/html, and with no <meta> tags in either document specifying character encoding, I tried loading both versions of the document in my test browsers, both directly from disk and from the web server, to see how the content would be auto-detected:

| Browser | UTF-8 from file | Windows-1251 from file | UTF-8 over HTTP | Windows-1251 over HTTP |
|---|---|---|---|---|
| Firefox | UTF-8 | Windows-1251 | Windows-1252 | Windows-1251 |
| Chrome | UTF-8 | Windows-1251 | Windows-1252 | Windows-1251 |
| IE11 | Windows-1252 | Windows-1252 | Windows-1252 | Windows-1252 |
| Edge | Windows-1252 | Windows-1252 | Windows-1252 | Windows-1252 |

Results were inconsistent. I was surprised that Firefox and Chrome both auto-detected Windows-1251, but not UTF-8. I was also really surprised by the difference in behaviour between file and HTTP transports. I tested both ways to be able to say I did, not because I expected to see a difference! A Windows-1252 default still makes a lot of sense in some situations. There’s a lot of old content on the web which doesn’t declare a content type, and assumes it’ll be interpreted as Windows-1252. But I would expect that to be the default for documents which trigger quirks mode, not well-formed HTML5.
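To make the failure mode concrete, here is a small Python sketch of what a Windows-1252 fallback does to UTF-8-encoded Cyrillic. The sample word is made up, and this only illustrates the decoding step; it says nothing about how any browser's detector actually works.

```python
text = "Привет"  # hypothetical sample, not the test page content

utf8_bytes = text.encode("utf-8")

# What a Windows-1252 interpretation of those UTF-8 bytes looks like.
mojibake = utf8_bytes.decode("cp1252", errors="replace")
print(mojibake)

# The bytes are intact; only the interpretation is wrong.
print(utf8_bytes.decode("utf-8") == text)


def is_valid_utf8(data: bytes) -> bool:
    """Strict UTF-8 validity check (an illustration, not a browser heuristic)."""
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True


# The same word encoded as Windows-1251 does not happen to be valid UTF-8.
print(is_valid_utf8(text.encode("cp1251")))
print(is_valid_utf8(utf8_bytes))
```

Each two-byte Cyrillic letter comes out as two Latin-range characters, which is the classic doubled-length mojibake.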

Internet Explorer and Edge are pretty obviously just defaulting to Windows-1252 in all cases without trying to do any auto-detection. In IE, if I pop open the “View” menu and enable “Auto-Select” from the “Encoding” menu, it correctly auto-detects all four. Edge has decided that view options and context menus are unnecessary, and I can’t find any option to change the page encoding. Outside of that, they both have some pretty weird quasi-caching behaviour. IE seems to keep page encodings around in memory: if I edit the page source to add a <meta> tag with encoding information, it will still interpret the document with that encoding across page reloads (even after removing the tag again) until I either feed in a different encoding (either by HTTP header or by <meta> tag) or restart the browser. Clearing the cache doesn’t seem to affect it one way or the other.

Edge is even worse, because it seems to cache character encodings to disk. Changes to the Content-Type HTTP header alone don’t bust the page cache, and neither does restarting the browser. (This is what drove me to set the Cache-Control: no-store header. Then changes at least kick in after a browser restart.) If Edge ever loads your page with the wrong encoding, you can’t rely solely on correcting the Content-Type header to fix the interpretation. The best way to straighten it out is to fix the header, and set a correct <meta> tag.

Verdict: always specify a <meta> tag with content encoding. WHATWG says to always specify your content encoding anyway, and the easiest way to make sure you do so is to do it in markup. (If you do set the HTTP header, too, then UTF-8 is the only standards-compliant option, so the HTTP header and the tag will necessarily agree.) Oh, and do it from the get-go, because legacy versions of IE/Edge will cache your mojibake for a long time.

<meta> tags can specify encodings other than UTF-8

…even if you shouldn’t.

For this test, I added a correct <meta> tag to both encodings of the page right after the opening <head> tag. With those tags in place, the table from the previous point turns all-green: all browsers interpret both encodings correctly. Use the long form of the <meta> tag and an HTML 4.01 Strict doctype and it’ll validate, too, because it’s only as of HTML5 that UTF-8 is the only valid option.

Verdict: HTML5 is only ever supposed to be UTF-8, but of course browsers have maintained backwards compatibility with old content, and for simplicity’s sake (sanity’s sake?) extend that compatibility to HTML5.

HTTP headers always win over <meta> tags

With that basic understanding in hand, let’s move on to conflict: how is a content encoding set in markup treated when the server indicates something different? For this test, I reconfigured my web server to explicitly serve a Content-Type header of text/html; charset=utf-8:

$ curl -sI http://localhost/encoding-utf-8.html

HTTP/1.1 200 OK

...

Content-Type: text/html; charset=utf-8

And I added <meta> tags to the beginning of the <head> elements of the HTML documents declaring their respective character encodings:

<meta charset="windows-1251">

...

<meta charset="utf-8">

All of my test browsers interpreted both documents as UTF-8, as instructed by the web server.

I tried again, reconfiguring the web server to explicitly specify a Windows-1251 content encoding. All of my test browsers interpreted both documents as Windows-1251.

Verdict: if the HTTP Content-Type header declares a character encoding, it always wins. Sucks that eight-year-old, deprecated, and hard-to-Google W3C documentation is the only place that correctly and explicitly calls out this behaviour. It’s also a bit unfortunate that the content can’t override the web server here (although probably not a deal-breaker if everyone’s defaulting to UTF-8 like they ought).
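The precedence I observed can be written down as a small model. This Python sketch is only that, a model of the test results above: the function names and the regex are my own, and the Windows-1252 default is a simplification, since Firefox and Chrome sometimes auto-detected instead.

```python
import re
from email.message import Message
from typing import Optional

# Rough heuristic covering both the short and long <meta> forms.
META_CHARSET = re.compile(
    rb"""<meta\b[^>]*\bcharset\s*=\s*["']?([\w.:-]+)""", re.IGNORECASE
)


def charset_from_header(content_type: Optional[str]) -> Optional[str]:
    """Extract the charset parameter from a Content-Type header value."""
    if not content_type:
        return None
    msg = Message()
    msg["Content-Type"] = content_type
    return msg.get_content_charset()  # lowercased, or None if absent


def charset_from_meta(document: bytes) -> Optional[str]:
    """Extract the charset named by the first charset-bearing <meta> tag."""
    match = META_CHARSET.search(document)
    return match.group(1).decode("ascii").lower() if match else None


def effective_charset(content_type: Optional[str], document: bytes,
                      default: str = "windows-1252") -> str:
    """Header wins over <meta>; <meta> wins over the (simplified) default."""
    return (charset_from_header(content_type)
            or charset_from_meta(document)
            or default)
```

For example, `effective_charset("text/html; charset=utf-8", b'<meta charset="windows-1251">')` returns `"utf-8"`, matching what all four browsers did.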

<meta> tags can go before or after <title>

I had somehow gotten it stuck in my head that you should put the <meta> tag defining the content encoding before any other rendered content in the page, including <title>. Otherwise, you might end up with the body content being interpreted well, but a mangled title in the page tab, and a lot of confusion because the browser tools indicate that the page was interpreted the way you meant it to be. I think I probably got this by misremembering or misreading a sentence from Joel Spolsky’s excellent developer’s introduction to text encoding:

But that meta tag really has to be the very first thing in the <head> section because as soon as the web browser sees this tag it’s going to stop parsing the page and start over after reinterpreting the whole page using the encoding you specified.

“The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets (No Excuses!)”

For this test, I added a correct <meta> tag to both encodings of the page, and tried placing them before and after the <title> tags. All browsers interpreted the titles of both versions of both encodings correctly.

For fun, I reapplied the HTML 4.01 Strict doctype and long-form <meta> tags from the second test, and tried this test on Firefox 3.5 (a 9-year-old browser) and Internet Explorer 5 (a 21-year-old one). Neither one of them cared about where the <meta> tag was placed in relation to the <title>, either, displaying the documents correctly both ways.

Verdict: it doesn’t matter if the <meta> tag is before or after the <title>, and it never did. But you probably should put it first to avoid wasting compute time, however tiny.

<meta> tags can start in the first 1,024 bytes

WHATWG specifies that if you’re going to put encoding information in markup, it should be wholly located within the first 1,024 bytes of the file. Do browsers actually enforce this cutoff? Do they fall back to default encoding interpretation if it’s not in that range?

For this test, I relocated the <meta> tag to different places in the document, and observed how the encoding ended up being interpreted. I intentionally specified an incorrect content encoding in the <meta> tag so that I could differentiate between its value being interpreted correctly and the default encoding detection being correct. For all of these, the desired insertion point ended up in the middle of a paragraph, so I closed that element and reopened it again after the <meta> tag. I used a hex editor to confirm my offsets in bytes, not codepoints. The results were the same for both transports and encodings.

| Browser | Last accepted <meta> position |
|---|---|
| Firefox | Any (without a visible reload: ending at 1,024) |
| Chrome | Starting at 1,024 |
| IE11 | Any |
| Edge | Any |

Note, again, that placing <meta> elements of any sort outside of the <head> is not standards-compliant, so if leopards eat your face, well, you asked for it.

Firefox does visibly rerender the entire page if any part of the tag is outside of the first 1,024 bytes. And you’ll get a notice like this in the console:

The page was reloaded, because the character encoding declaration of the HTML document was not found when prescanning the first 1024 bytes of the file. The encoding declaration needs to be moved to be within the first 1024 bytes of the file.

You’ll get a brief FOUC-ish stutter while the page is rerendered in the new encoding (particularly noticeable with the UTF-8 version of the file being served over HTTP, for some reason).

IE and Edge both handled the encoding information from the <meta> tag seamlessly, regardless of where it was on the page. No visible reload, even after padding the file out past 32 KB in length.

Chrome is a weird outlier here. It’s like they sort of paid attention to the spec by doing something about a boundary at 1,024 bytes, but it’s for the wrong end of the tag! Even if only the opening < of the <meta> tag falls within the first 1,024 bytes of the file, Chrome will handle it. The other browsers will handle it anywhere, including after the closing </html>.

Verdict: Try to be a little careful to keep your <meta> tag toward the beginning of the <head>, especially if you have a lot of long Open Graph or description tags (you early-’00s SEO monster, you). It’s not like it’s a small window to hit, but I have seen some awful WordPress template headers where it’d be possible to fall on the wrong side of it. Also, if you do want to play with fire, make sure you count bytes, not characters.
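On that last point, the gap between character offsets and byte offsets can be large for Cyrillic text in UTF-8. This Python sketch uses a made-up page and a hypothetical helper, `tag_offsets`, to show the same tag landing on different sides of the 1,024-byte mark depending on the encoding:

```python
def tag_offsets(document: str, encoding: str, tag: str = "<meta"):
    """Return (character_offset, byte_offset) of the first `tag` in `document`."""
    char_offset = document.find(tag)
    if char_offset < 0:
        raise ValueError(f"{tag!r} not found")
    # Encode everything before the tag to count the bytes that precede it.
    byte_offset = len(document[:char_offset].encode(encoding))
    return char_offset, byte_offset


# Hypothetical page: 600 Cyrillic letters ahead of the tag (deliberately
# non-compliant placement, as in the tests above).
page = "<p>" + "я" * 600 + '</p><meta charset="utf-8">'

print(tag_offsets(page, "cp1251"))  # (607, 607)
print(tag_offsets(page, "utf-8"))   # (607, 1207)
```

The tag starts at character 607 either way, but in UTF-8 it begins 1,207 bytes in, already past the window.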

As an aside, 1,024 seems like a weird number for this. Yes, it’s in keeping with the time-honoured tradition of buffer sizes being powers of two, but I think something closer to a common Internet MTU (1,500 bytes, or maybe 1,492) would be a more justifiable limit. But I guess if you start down that road then what you’re really trying to say is that you want the character encoding to be located somewhere in the first response packet to the request, and then you need to start thinking about the size of the response HTTP headers, and it turns into a whole ball of wax. “Within 1,024” probably ends up being in the first packet most of the time, anyway.

Conclusion

My main takeaway is that it’s still pretty easy to run afoul of encoding issues even though we live in what should be a UTF-8-by-default world. It was hard enough to shake out some of this behaviour when I had full control over both the web server configuration and the page markup, and had some idea of what I was doing.

It seems like the <meta> tag is still pretty important. I think, though, so is adhering to the standards (where they’re intelligible). Most of what I’ve poked at in this post are edge-cases of the spec, if not complete violations of it. Stick to UTF-8 content, make sure you’ve got a <meta> tag in place, and you should be okay.